SYNERGY as a concurrent object oriented language

Adil KABBAJ

INSEA, Rabat, MOROCCO, B. P. 6217

fax : 212 7 77 94 57

email : insea@wizarat-sukkan.gov.ma

Abstract. This paper presents the concurrent object oriented model embedded in SYNERGY and an application that illustrates this use of the language.

Basic features of the SYNERGY concurrent object oriented model are : 1) an active object, with its message queue, its declarative part and its procedural part (script + body), is described as a labeled context, 2) both the “coupled” communication mode (an object communicates directly with another object) and the “non-coupled” communication mode (objects share and communicate via a common space) are provided, 3) object types form a hierarchy used by the inheritance mechanism and 4) both inter- and intra-concurrency are provided ; several objects can be active in parallel and several methods of an object can be executed in parallel.

This paper also shows that the integration of the concurrent object oriented model into SYNERGY is based on the use of key elements such as CG structure, contexts and coreferences.

1. Introduction

Various models of concurrent object oriented programming have been proposed in the literature [31, 2, 32, 29, 6]. In general, a concurrent object oriented program is a collection of active objects that can be executed in parallel and that interact by sending/receiving messages to/from each other. An object has a message queue, a declarative part (a set of variables that describes the state of the object) and a procedural part called “script”. A script is a collection of triplets <MessagePattern, Constraints, Behavior>. If an object in a steady state has a message in its queue and the message can be unified with one MessagePattern of the script, then the associated constraints are considered ; if they are satisfied, the associated behavior is executed and the object enters an in-execution state. An object has a lifecycle ; it can be in a steady state, in an in-execution state or in a waiting state (i.e. waiting for a message).

Concurrent object oriented models differ in many ways : a) classes of objects and a hierarchy of classes can be defined and used [24, 22], b) an object can have a body which is different from the script, as is the case in the POOL family [3], c) execution inside an object is sequential, as is the case for the ABCL language [32], but some models allow for parallel execution inside objects [25, 18, 19], and d) some models use a “coupled” communication mode (an object sends a message to another specified object) while others use a “non-coupled” communication mode (objects share a common space, as is the case for a blackboard architecture, and they communicate through this space) [13, 4].

In the companion paper [17] we show how basic elements of the CG theory (especially CG structure, context, canon, coreference and referent expansion operator) form the basis for the multi-paradigm language SYNERGY.

This paper presents the concurrent object oriented model embedded in SYNERGY. An active object, with its message queue, its declarative part and its procedural part (script + body), is described as a labeled context. SYNERGY allows inter- and intra-concurrency ; several objects can be active in parallel and several methods of an object can be executed in parallel. SYNERGY allows for a definition of objects’ classes and of a hierarchy of classes and it provides both “coupled” and “non-coupled” communication modes. Of course, the two modes can be used in a single application.

The paper is organized as follows : section 2 defines the structure of an active object, section 3 concerns inheritance in SYNERGY, section 4 defines the “message dispatcher” and “method manager” operations, section 5 defines the “coupled” communication operations (Send, SendSync, Receive and WaitMessage), section 6 defines the “non-coupled” communication operations (Put, Get, Getw, See and Seew) and section 7 gives an example of a concurrent object oriented application developed with SYNERGY. Related work is then considered in section 8. Finally, section 9 summarizes the paper and outlines some future work.

2. The structure of an active object in SYNERGY

An active object is described by a context (Figure 1) that contains a concept for the message queue, a concept for the “super-concept” [SuperType :super], a declarative part, a body and a script. The last three components are represented as CGs. The script of an object is composed of methods and of a call to the MessageDispatcher operation, which dispatches incoming messages to the object’s methods (MessageDispatcher is considered in section 4). A method (or a service) is described as a context composed of three concepts (Figure 1) : [MethodMgr :super # ?], [MessagePattern] and [BehaviorMtd :behavior]. MethodMgr is a predefined type considered in section 4, the referent description of [MessagePattern] is the message pattern associated with the method and BehaviorMtd is a defined type that specifies the constraints and the behavior of the method. In addition, each method has its own message queue, handled by the MethodManager (MethodMgr) of the method.

For each method Mtdj, the user should specify its message pattern and define its behavior (i.e. define the type BehaviorMtdj).
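As a rough illustration of the structure just described, the components of an active object can be mirrored by plain data classes. This is only a sketch in Python, not SYNERGY notation : the class and field names (`Method`, `ActiveObject`, `state`, `script`) are hypothetical, and a dictionary stands in for the CG that describes a message pattern.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Callable, Deque, Dict, List, Optional


@dataclass
class Method:
    """One method of the script: a pattern, a behavior and its own queue."""
    pattern: Dict[str, Any]  # stands in for [MessagePattern]
    behavior: Callable[["ActiveObject", Dict[str, Any]], None]  # [BehaviorMtd :behavior]
    queue: Deque[Dict[str, Any]] = field(default_factory=deque)  # per-method queue


@dataclass
class ActiveObject:
    """Hypothetical mirror of the labeled context that describes an active object."""
    name: str
    super_object: Optional["ActiveObject"] = None  # [SuperType :super]
    message_queue: Deque[Dict[str, Any]] = field(default_factory=deque)
    state: Dict[str, Any] = field(default_factory=dict)  # declarative part
    script: List[Method] = field(default_factory=list)  # the methods
    body: Optional[Callable[["ActiveObject"], None]] = None  # optional body


def open_eyes(obj: ActiveObject, message: Dict[str, Any]) -> None:
    # Illustrative behavior: update the declarative part of the object.
    obj.state["eyes"] = "open"


patient = ActiveObject(
    name="patient",
    state={"eyes": "close"},
    script=[Method(pattern={"content": "open eyes"}, behavior=open_eyes)],
)
```

Each `Method` carries its own queue, which is what later allows several methods of one object to progress independently.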

Note : in diagrams, a rectangle with a “colored surface” represents a concept with state “trigger ; ?”.

The procedural semantics of an active object includes object inheritance, object management (with MessageDispatcher and MethodManager) and object communication. These elements are introduced in sections 3-6. However, an outline of this procedural semantics can be stated as follows : once an active object receives a message M, the object’s MessageDispatcher looks for a method that can process the message M (i.e. M unifies with the message pattern of the method). If such a method is found, then a copy of the message M is inserted in the message queue of the method ; otherwise a copy of the message M is sent to the super-concept of the object. When a method is activated, it considers, in sequence, all the messages of its message queue.

3. Inheritance in SYNERGY

Inheritance is an important abstraction mechanism based on the use of the class-object hierarchy. A class inherits both the declarative and the procedural parts of its super-classes. When a reference is made to an element of the declarative part of an object and the element is not found in the description of the object, the element is searched for in its super-classes. Also, when an object receives a message that can’t be handled by any method of the object, the message is sent to the super-class of the object.

In SYNERGY, declarative (or attribute) inheritance is considered as a special case of coreference resolution [15]. Procedural (or method) inheritance in SYNERGY is considered in the following section.

4. Message dispatcher and method manager

The two operations MessageDispatcher and MethodManager enable a decentralized treatment of an object’s methods ; each method evolves according to its behavior and to the content of its message queue.

The primitive operation MessageDispatcher has the object’s message queue as input and the object’s methods as outputs (Figure 1). The operation is triggered when new messages are inserted in the object’s message queue and it determines which methods could process the new messages. MessageDispatcher considers each new message NewMsg and tries to unify a copy of NewMsg with a copy of a method’s MessagePattern. If the unification succeeds with the MessagePattern of a method M, then the unified copy is inserted in the queue of the method M ; otherwise a copy of NewMsg is sent to the super-concept of the object. In both cases, the message NewMsg remains in the object’s message queue until it is retrieved by a Receive or a WaitMessage operation.
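The routing rule of MessageDispatcher can be sketched as follows. This is a simplified Python illustration under several assumptions : `unify` is a toy stand-in for CG unification (dictionary keys with `None` as a wildcard), the first matching method wins, and insertion and dispatching are merged into one hypothetical `dispatch` call, whereas in SYNERGY the dispatcher is triggered by the insertion.

```python
import copy
from collections import deque


def unify(message, pattern):
    """Toy stand-in for CG unification: None in the pattern is a wildcard.

    Returns a copy of the message on success, None on failure.
    """
    for key, expected in pattern.items():
        if key not in message:
            return None
        if expected is not None and message[key] != expected:
            return None
    return copy.deepcopy(message)


class Obj:
    """Hypothetical active object reduced to what the dispatcher needs."""

    def __init__(self, name, patterns, parent=None):
        self.name = name
        self.parent = parent  # the super-concept
        self.message_queue = deque()
        self.methods = [{"pattern": p, "queue": deque()} for p in patterns]

    def dispatch(self, new_msg):
        """Return the name of the object whose method accepted the message."""
        # The original message stays in the object's own queue.
        self.message_queue.append(new_msg)
        for method in self.methods:
            unified = unify(new_msg, method["pattern"])
            if unified is not None:
                method["queue"].append(unified)  # unified copy goes to the method
                return self.name
        if self.parent is not None:
            # No method matched: a copy is sent to the super-concept.
            return self.parent.dispatch(copy.deepcopy(new_msg))
        return None
```

For example, with `agent = Obj("agent", [{"content": "open window"}])` and `patient = Obj("patient", [{"content": "open eyes"}], parent=agent)`, the message `{"content": "open window"}` sent to `patient` ends up in the queue of the method defined in `agent`, which is the procedural (method) inheritance described above.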

The defining graph of MethodMgr (for MethodManager) depends on its context, which is the description of a method (Figure 3). The defining graph of MethodMgr contains a concept for the queue of the method : [Queue :queue], a reference to the behavior of the method : [Behavior :behavior.] and a concept for the current message : [Message :message]. MethodMgr executes an iterative treatment : at each cycle it considers a message from the method’s queue and activates the method’s behavior (Figure 3). Thus, if the queue of the method is not empty, the operation RemoveHead removes the head of the queue and assigns it as a new value for [Message :message]. The “next” relation ( [RemoveHead]-next->[:behavior.] ) will propagate the activation to the behavior of the method. When the method’s behavior terminates, the other “next” relation ( [:behavior.]-next->[RemoveHead] ) will re-trigger the RemoveHead operation, which first checks that the queue is not empty (i.e. the predicate [IsNotEmpty] is a precondition for [RemoveHead], specified by the guard relation “grd,f”). For more details about the definition of MethodMgr, the reader is referred to [15].

Figure 3 : Defining graph of MethodMgr and its context
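The iterative treatment performed by MethodMgr amounts to a guarded loop. The Python sketch below only mirrors the control flow described above ; the function name `method_manager` and the returned count are illustrative additions, not part of SYNERGY.

```python
from collections import deque


def method_manager(queue, behavior):
    """Drain a method's queue, activating the behavior once per message."""
    handled = 0
    while queue:  # the [IsNotEmpty] guard on [RemoveHead]
        message = queue.popleft()  # [RemoveHead] assigns [Message :message]
        behavior(message)  # "next" relation: activate the behavior
        handled += 1  # behavior terminated: "next" re-triggers RemoveHead
    return handled
```

The loop stops as soon as the guard fails, which corresponds to the method returning to a steady state until new messages are dispatched to its queue.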

5. “coupled” communication mode

Communication between active objects is “coupled” if objects know each other, i.e. if objects send messages to other specified objects. This section presents the “coupled” communication operations provided by SYNERGY : Send, SendSync, Receive and WaitMessage. The definition of these operations is based on the use of compound coreference, especially the reference, in the context of an object, to the message queue of another object. Moreover, coreference is used to read, write or update the description/value of the referenced concept.

Before defining the above operations, let’s consider first the structure of a message (Figure 4) : it has an identifier, a content, a sender, a receiver, a type (inform, request,...) and a date. Other structures can be defined for a message, depending on the application.

Figure 4 : The structure of a message

The operation Send (Figure 5) inserts a message in the message queue of a receiver and terminates its execution, without waiting for a response.

When a message is inserted in the message queue of an object O, all the WaitMessage operations that concern the object O and that are waiting for a message should be aware of that insertion. This is done in SYNERGY by triggering the message queue after a Send (more precisely, the “next” relation in Figure 5 is used to trigger the messageQueue after the insertion operation). Since the WaitMessage operations have input arguments that are in coreference with the message queue of the object O, a forward propagation of the activation will be done from the message queue to the WaitMessage operations, through the coreference links.

Figure 5 : Definition of Send
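The message structure of Figure 4 and the behavior of Send can be approximated as below. This is a minimal Python sketch : the field types, the `MessageQueue` class and its `watchers` list are assumptions made for illustration, the latter standing in for the WaitMessage inputs that are in coreference with the queue.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    """Fields listed for Figure 4; the concrete types are assumptions."""
    identifier: str
    content: str
    sender: str
    receiver: str
    type: str  # e.g. "inform" or "request"
    date: str


class MessageQueue:
    """Hypothetical queue that can be 'triggered' after an insertion."""

    def __init__(self) -> None:
        self.items: List[Message] = []
        # Callbacks standing in for concepts coreferent with this queue.
        self.watchers: List[Callable[[Message], None]] = []

    def trigger(self, message: Message) -> None:
        # Forward propagation of the activation to every waiting operation.
        for watcher in list(self.watchers):
            watcher(message)


def send(message: Message, queue: MessageQueue) -> None:
    """Insert the message, trigger the queue, return without waiting."""
    queue.items.append(message)
    queue.trigger(message)
```

Triggering after the insertion, rather than before, guarantees that a watcher always finds the new message already present in the queue.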

The operation SendSync (Figure 6) sends the message to the receiver ; the sender, which is identified from the description of the message, then waits for a response from the receiver.

Figure 6 : Definition of SendSync

Figure 7 gives an example of a SendSync : the agent Anne sends a message to the agent Jo asking him to move his legs and then she waits for a response from Jo, concerning this request.

Figure 7 : An example of SendSync

The Receive primitive searches the queue for a message that can be unified with its first argument. If such a message is found, it is retrieved from the queue and the operation returns “true” ; otherwise it returns “false”. In both cases, the operation then terminates its execution.

Once activated, the operation WaitMessage (Figure 8) will remain so until the queue receives a message that can be unified with its first argument.

Let’s consider how the continuous activation of WaitMessage is achieved, even if there is no “real” activation ; the operation is just waiting (Figure 8) : the concept [Boolean :active #!@] is initiated at state “in-execution ; !@” and it will remain so until its state is modified by a specific operation (i.e. SetState). The concept [Boolean :active #!@] keeps [WaitMessage] active : once a context [WaitMessage] is active, it will remain so as long as the concept [Boolean :active #!@] is active.

To consider the definition of WaitMessage in more detail, assume that the following call to WaitMessage is nested in an active context C : [Message]-in1->[WaitMessage]<-in2-[Queue :ActObj.messageQueue]. When a new message is inserted in the messageQueue of an active object ActObj, the concept [Queue :messageQueue] in the description of [ :ActObj] will be triggered and this state will be propagated, through the coreference link, to [Queue :ActObj.messageQueue]. Now, this concept is in coreference with [Queue :in2/], the second input parameter of the procedure WaitMessage (Figure 8), so the concept [Queue :in2/] will be triggered too (once [WaitMessage] is activated). Next, the relation “in2” will propagate the activation from [Queue :in2/] to the concept [Receive] (Figure 8). If the operation Receive returns “false”, WaitMessage will wait for another insertion ; otherwise the concept [Inactivate] will be triggered and activated. Inactivate is a predefined type that uses the “SetState” primitive to set the state of a concept to “steady ; o” (the referent of the concept should be given as the argument of Inactivate). Thus, in our case, Inactivate will set the state of the concept [Boolean :active #!@] to “steady ; o” and so the continuous activation of WaitMessage will terminate.

Figure 8 : Definition of WaitMessage
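In a conventional threaded setting, the relation between Receive and WaitMessage resembles a condition variable : each insertion wakes the waiters, which retry a non-blocking receive. The Python sketch below is an analogy only, not the SYNERGY mechanism. The `Mailbox` class, the dictionary-based matching and the `timeout` parameter are assumptions, and the operations return the message (or `None`) instead of “true”/“false”.

```python
import threading


class Mailbox:
    """Hypothetical message queue with a non-blocking and a blocking read."""

    def __init__(self):
        self._items = []
        self._changed = threading.Condition()

    def send(self, message):
        with self._changed:
            self._items.append(message)
            self._changed.notify_all()  # plays the role of triggering the queue

    def _take(self, pattern):
        # Remove and return the first message whose fields match the pattern.
        for index, message in enumerate(self._items):
            if all(message.get(k) == v for k, v in pattern.items()):
                return self._items.pop(index)
        return None

    def receive(self, pattern):
        """Like Receive: try once, never wait."""
        with self._changed:
            return self._take(pattern)

    def wait_message(self, pattern, timeout=None):
        """Like WaitMessage: retry Receive after each insertion."""
        with self._changed:
            while True:
                found = self._take(pattern)
                if found is not None:
                    return found  # corresponds to [Inactivate] ending the wait
                if not self._changed.wait(timeout):
                    return None  # timed out (timeout is not part of SYNERGY)
```

A message that does not match simply wakes the waiter, fails the retry and leaves it waiting for another insertion, as described for the case where Receive returns “false”.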

6. “non-coupled” communication mode

In some applications, active objects don’t know each other ; they communicate indirectly via a common space. An active object can read, write or delete data from the common space. An object will put a message in the common space instead of sending it to a specific target object. Also, an object that needs some information will consult the common space instead of looking in its own messageQueue.

The use of a blackboard is an example of “non-coupled” communication [13]. Also, three language families are based on this communication mode : 1) an “imperative” family where objects of a common space are tuples, as is the case for Linda [4], 2) a “logical” family where objects of a common space are terms [5] and 3) a “constraint” family where objects of a common space are constraints [14, 26].

In this section, we consider briefly the case of Linda and then we present the implementation of this approach in SYNERGY.

Linda is a coordination language that allows a sequential language (such as C or Pascal) to formulate concurrent processes that share and communicate information through a “tuple space”. A tuple is of the form <tag, expr1, ..., exprN> where “tag” is an identifier and each exprj is a data item (integer, boolean, etc.) or an expression (a function call for instance). Thus, a tuple can be passive or active ; an active tuple can be evaluated and it can initiate the execution of concurrent processes.

To communicate information and to synchronize actions via a tuple space, processes use four basic operations. The operation “out t” (t represents a tuple) evaluates the fields of the tuple t and puts t in the tuple space. The operation “in t” extracts from the tuple space a tuple that can be matched with t ; if no such tuple exists, the process that executed the operation will wait. The operation “rd t” is similar to “in t” except that it doesn’t extract the tuple from the space. The operation “eval t” evaluates the fields of the tuple. Two other operations, “inp” and “rdp”, are provided by Linda and correspond respectively to “in” and “rd” without the waiting condition.

Let’s now consider how such a “non-coupled” communication mode is implemented in SYNERGY. The user can define several common spaces (instead of only one common space). A common space is viewed as an object with a message queue, and active objects communicate by sending/receiving messages to/from the common space.

The type CommonSpace is defined as follows : [CommonSpace : _X = [Queue : messageQueue] ] ; the defining graph of CommonSpace is a message queue. A specific common space SpaceName should be declared as follows : [CommonSpace : SpaceName].

The following “non-coupled” communication operations are provided by SYNERGY :

The operation Put adds a message to a common space. This operation corresponds to the Send operation where the target object is the common space.

The operation Get returns “true” if it extracts from a common space an element (primitive data or CG) that matches its first argument, and it returns “false” otherwise. If the space doesn’t contain such an element, Get will not wait. Get corresponds to the Receive operation on the message queue of the common space.

The operation Getw looks for an element that matches its first argument. If it is found, the element is extracted from the space ; otherwise the operation will wait until a new element that matches the first argument of the operation is added to the space. Getw corresponds to WaitMessage on the message queue of the common space.

The operation See is similar to Get except that it doesn’t extract the data from the common space.

The operation Seew is similar to Getw except that it doesn’t extract the data from the common space.
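The difference between the extracting and non-extracting operations can be made concrete with a small sketch. The Python below covers only the non-waiting operations (Put, Get, See) ; the tuple-based matching with `None` as a wildcard is an assumption borrowed from Linda-style matching, and the methods return the element (or `None`) rather than “true”/“false”.

```python
def matches(element, pattern):
    """Toy matching: same length, and None in the pattern matches anything."""
    return len(element) == len(pattern) and all(
        p is None or p == e for e, p in zip(element, pattern)
    )


class CommonSpace:
    """Hypothetical common space: a name plus a message queue."""

    def __init__(self, name):
        self.name = name
        self.message_queue = []

    def put(self, element):
        """Like Put: add an element to the space."""
        self.message_queue.append(element)

    def _find(self, pattern):
        for index, element in enumerate(self.message_queue):
            if matches(element, pattern):
                return index
        return None

    def get(self, pattern):
        """Like Get: extract a matching element, never wait."""
        index = self._find(pattern)
        return None if index is None else self.message_queue.pop(index)

    def see(self, pattern):
        """Like See: read a matching element but leave it in the space."""
        index = self._find(pattern)
        return None if index is None else self.message_queue[index]
```

Getw and Seew would add the waiting behavior of WaitMessage on top of `get` and `see` ; they are omitted here to keep the sketch short.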

7. Application : Visual agent-oriented modeling of the Intensive Care Unit

In the context of an intelligent tutoring system project [16], SYNERGY has been used for a visual agent-oriented modeling of the Intensive Care Unit (ICU), focusing especially on the patient assessment task, performed by a nurse, and on the interaction between the two agents (the patient and the nurse). During the modeling process, we became increasingly aware of the potential role of SYNERGY as a tool for “cognitive task analysis”. Indeed, during the formulation and simulation with SYNERGY, corrections, refinements and extensions were made to the initial model.

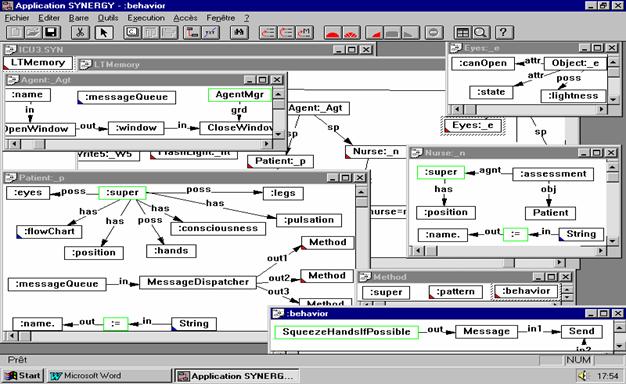

Figure 9 shows a part of the long term memory (the knowledge base or canon) for the ICU application : definitions of the types Agent, Nurse, Patient and other related types. The definition of the type Agent is part of the built-in generalization graph provided by SYNERGY upon creation of a new application. An Agent (Figure 9, window Agent:_Agt) has a messageQueue, a name, a dialog window and an agent manager.

A double-click on a concept produces a window that contains more detail about the concept.

The types Nurse and Patient are specializations of the type Agent. Nurse (Figure 9, window Nurse:_n) is an agent with a spatial position who is responsible for the patient assessment task, which corresponds to five system evaluations (nervous, respiratory, circulatory, digestive and urinary). At the structural level, Nurse is an active object with a super-concept and a body (the assessment task) ; it has no script, however. In the description of Nurse (Figure 9, window Nurse:_n), the states of the two concepts [Agent :super] and [ :=] are initiated to “trigger ; ?” (the state is not written out in Figure 9 because a concept in state “trigger ; ?” is represented by a green rectangle).

The type Patient (Figure 9, window Patient:_p) is an agent with components like hands, legs and eyes. ``Practical`` definitions are used for them. For instance, Eyes is defined as an object with three attributes (Figure 9, window Eyes :_e) : lightness, state (which can be ``open`` or ``close``) and canOpen (which can be ``true`` if the eyes can be opened and ``false`` otherwise).

A patient can respond to three types of message patterns (Figure 9, window Patient:_p) : "open eyes", "move legs" and "squeeze hands". A behavior is associated with each pattern. For instance, if the patient receives the message "open eyes", the procedure "OpenEyesIfPossible" will be activated. The latter specifies that if the patient can open his eyes (i.e. the attribute canOpen of Eyes is true), a delay (implemented by the predefined operation WaitFor) will occur that simulates the time required for the patient to open his eyes, and then the state of his eyes will be set to "open".

If the patient receives the message "squeeze hands", the following behavior will be activated (Figure 9) : execute the procedure "SqueezeHandsIfPossible" and then send its result (``canSqueezeHands`` or ``cannotSqueezeHands``) as a message to the nurse.
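The two patient behaviors described above can be paraphrased in a few lines. This Python sketch is only a reading of the prose, not the graphs of Figure 9 : `time.sleep` stands in for the predefined WaitFor operation, the delay value is arbitrary, and the `canSqueezeHands` flag on the patient is a hypothetical attribute name.

```python
import time


def open_eyes_if_possible(eyes, delay_seconds=0.01):
    """Open the eyes after a simulated delay, but only if canOpen is true."""
    if eyes["canOpen"]:
        time.sleep(delay_seconds)  # stands in for WaitFor
        eyes["state"] = "open"
    return eyes["state"]


def squeeze_hands_if_possible(patient):
    """Return the result that the patient then sends to the nurse."""
    # 'canSqueezeHands' as a patient attribute is an assumed name.
    if patient["canSqueezeHands"]:
        return "canSqueezeHands"
    return "cannotSqueezeHands"


eyes = {"lightness": "normal", "state": "close", "canOpen": True}
reply = {
    "content": squeeze_hands_if_possible({"canSqueezeHands": True}),
    "sender": "patient",
    "receiver": "nurse",
}
```

The `reply` dictionary illustrates the second behavior : the result of the procedure becomes the content of a message addressed to the nurse.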

At the structural level (Figure 9, window Patient:_p), Patient is an active object with a super-concept, a descriptive part, a message queue, a script and a body (the assignment instruction). In the description of Patient, the states of the two concepts [Agent :super] and [ :=] are initiated to “trigger ; ?”.

Figure 9 : Definitions of some types of the ICU application

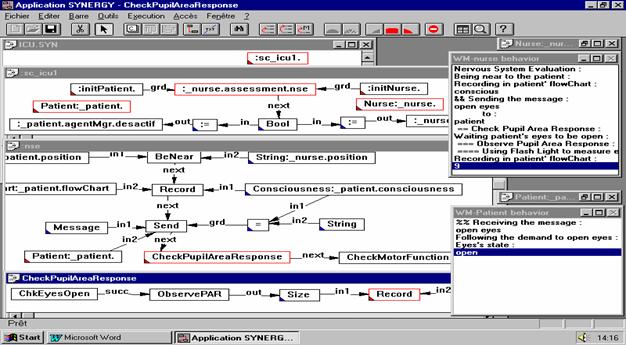

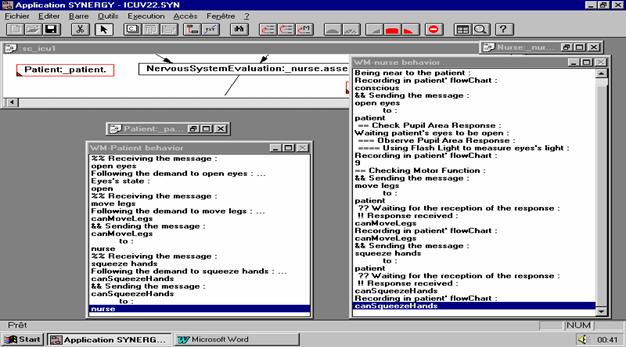

Once the ICU model has been defined, different scenarios (or situations) can be specified and simulated. For instance, the scenario sc_icu1 (Figure 10, window sc_icu1) specifies the activation of a patient, a nurse and a request to the nurse to evaluate the patient’s nervous system. In another scenario (not specified in this paper), the nurse is requested to evaluate both the nervous and the circulatory systems ; the latter requires the use of a Multi Channel Monitor to check several parameters. In this scenario, SYNERGY is used to describe and simulate different “entities” such as agents (with communication abilities), tasks and devices. Another scenario involves the physician as a third agent, with his diagnosis expertise (SYNERGY is thus used to describe and simulate another type of procedural knowledge).

Let’s now consider in more detail the first scenario sc_icu1 (Figure 10, window sc_icu1) : the two concepts "initPatient" and "initNurse" put the agents Patient and Nurse in particular states (i.e. they assign specific values to some of the attributes of the two agents). For instance, "initPatient" specifies that the patient’s eyes can be opened (canOpen = true) and their current state is "close", the patient’s consciousness is "conscious", he can move his legs, he can squeeze his hands, etc.

To activate the scenario sc_icu1, a request is inserted in the Working Memory (Figure 10, window ICU.SYN). The request is a reference to the defining node of sc_icu1 with the state “trigger ; ?” : [Scenario :sc_icu1.]. Upon activation of the SYNERGY interpreter, and since the concept [Scenario :sc_icu1.] is in state “trigger ; ?”, its description is determined (by coreference resolution) and then activated. Hence, a patient and a nurse will be “created”, initialized to a specific state (by "initPatient" and "initNurse") and then activated.

The first action of the two agents is to create a dialog window (Figure 10, windows "WM-patient behavior" and "WM-nurse behavior") where comments are written as the agents’ behavior proceeds.

Remark : The action of opening a dialog window isn’t specified directly in the description of Patient and Nurse ; it is rather “inherited” by the two agents from the Agent description. Indeed, let’s consider for instance the case of a Nurse (a similar treatment occurs for a Patient) : the description of a Nurse contains the concept [Agent :super] with its state initiated to “trigger ; ?” (see Figure 9 for the description of the types Nurse and Agent). When a Nurse is activated, the concept [Agent :super] becomes active too, involving the creation and the opening of a dialog window. The activation of a Nurse will also involve the activation of the concept [ :=] since its state has also been initiated to “trigger ; ?”. The assignment of a value/description to a bound node (such as [String :name.] in the context of [Nurse]) is in fact an assignment to the defining node (the defining node of [String :name.] is the concept [String :name] specified in the context of [Agent :super]).

Activation of [ :=] will be propagated, through the “out” relation, to the concept [String :name.] and the activation of the latter will be propagated, through the coreference link, to the concept [String :name] specified in the context [Agent :super]. Lastly, the activation of [String :name] will be propagated, through the “in” relation, to the concept [OpenWindow], involving the creation and opening of a window with a specific name.

Let’s now return to the scenario sc_icu1 : after the creation, initialization and activation of the patient and the nurse, the latter is requested to evaluate the patient’s nervous system (NSE) ; [NervousSystemEvaluation :_nurse.assessment.nse]. The task “nervous system evaluation” is described in Figure 10 (window :nse). First, the nurse has to stand near the patient and record the patient’s consciousness in the patient’s flowchart. If the nurse’s position is different from the patient’s position, the nurse must first move toward the patient (this is basically the definition of the BeNear procedure). In the case of the scenario sc_icu1, the nurse and the patient have the same position "center" and the patient is conscious.

The first lines in the dialog window of the nurse (Figure 10, window "WM-nurse behavior") show the “trace” of the two actions (BeNear and Record).

Figure 10 : Execution of the scenario sc_icu1

Next, the nurse has to send a message to the patient asking him to open his eyes (in order to check the patient’s pupil area response). Of course, the nurse will ask the patient only if he is conscious (see Figure 10, window :nse for the formulation of this condition on the Send operation) ; otherwise the message will not be sent and the next two checking tasks will not be executed. The two dialog windows show the result of this interaction (Figure 10).

After sending the message to the patient, the nurse will check the patient’s pupil area response (Figure 10, the task "CheckPupilAreaResponse") : first, the nurse has to wait until the patient opens his eyes (this waiting step is done by the sub-task "ChkEyesOpen" in "CheckPupilAreaResponse"). In the case of our scenario, after a period of time, the patient will open his eyes, enabling the nurse to observe the patient’s pupil area with a flash light (this is done by the sub-task "ObservePAR" in "CheckPupilAreaResponse") and then to record the result of the observation in the patient’s flowchart. The task "CheckPupilAreaResponse" is now terminated.

The dialog window of the nurse shows the result of the execution of this task.

Next, the nurse checks the patient’s motor function (i.e. the task "CheckMotorFunction") : she first asks the patient to move his legs, waits for the response from the patient and then records the result in the patient’s flowchart. The nurse will repeat the same behavior for the message "squeeze hands" (see the two dialog windows in Figure 11).

Figure 11 : Execution of the scenario sc_icu1 (continued)

With the termination of the task "CheckMotorFunction", the execution of the task ``nervous system evaluation`` as well as the whole scenario are terminated.

8. Related works

To our knowledge, no other work has been done to use CG as a basis for a concurrent object oriented language. In the context of a “classical” concurrent object oriented model (such as the Actor model of Hewitt [1]), Nagle [23] proposed the use of CG to represent the content of a message. A similar proposition has been advanced by Haemmerlé [10] in the context of a distributed architecture. A distributed application (called a multi-agent system) is composed of processes (C++ programs that are considered as agents) that exchange messages, represented by CGs, and that share a knowledge base of CGs.

In the context of problem solving and knowledge acquisition, Lukose et al. [20, 21] proposed “Executable Conceptual Structures” to describe and simulate actions and plans. Actions are expressed with a heterogeneous object oriented notation. Indeed, actions form a hierarchy and their execution can exploit the inheritance mechanism. An action has a descriptive part, i.e. a CG that specifies essential properties that must hold for the action, and a prescriptive or procedural part, i.e. a “script” that realizes the action. A script, expressed with a Prolog-based data structure, is formulated as an object with STRIPS-like semantics : it has a message handler and a set of methods that can respond to different messages and it also has (like an action in a STRIPS-like system) a set of CGs representing the “precondition”, “postcondition” and “delete” lists.

The execution of an action is initiated by sending a message to the script of the action. First, the preconditions are considered ; if they are satisfied, then an appropriate method is executed and next, the postconditions are produced.

“Executable Conceptual Structures” can’t be considered as a general purpose CG-object oriented language. As noted by Lukose in [20], the design constraints concerning actions have been adopted to allow the construction of a more complex structure, called a “problem map” (basically a sequence of actions), that is developed to satisfy some requirements of a knowledge elicitation technique. The latter considers, for instance, preconditions and postconditions of actions to construct a problem map.

Work has been done in the CG community to map object-oriented concepts to CG [12, 30] or to use CG as a logical foundation for object-oriented systems [28, 8].

Hines proposed in his master’s thesis [11] an initial mapping from concepts in the object-oriented paradigm to CG. As noted by Hines et al. [12], the proposition was rudimentary and straightforward (such as the mappings of class to type definition, instance to individual and inheritance to type hierarchy). The proposition was rudimentary especially concerning the treatment of methods and the interpretation of messages ; they proposed to represent methods as Sowa’s actor graphs, but no further detail was given, for instance “how are access methods treated ?” (such as getting or setting an attribute value of an object) and “how could a method that isn’t a mathematical expression be formulated as an actor graph ?”.

A more precise account of such a mapping is provided in the master thesis of Ghosh [9] who proposed a groundwork for deductive object oriented databases based on CG. The declarative part of a class is represented by a type definition while the class’s methods are defined as schemata of the type. As an example, the author gave the definition of the method ``Age`` as a schema of the type PERSON. A message or method call is represented as a CG with an actor, prefixed by the type of the receiving object (i.e. <PERSON:DIFF_DT>). Method execution is similar to the approach proposed by Sowa for answering database queries [27] : once the schema that defines the actor is found (the search uses inheritance hierarchy, starting with the schemata of the type that prefixes the actor’ name) and the query is joined with the schema, the resulted CG (with actors) is then activated (as described by Sowa [27]). A schema can contain calls to other methods which are treated in the same way as the initial query.

Like Hines et al., Wuwongse and Ghosh [30] define methods as Sowa’s actor graphs (more precisely, as schemata with bound actors) and so they restrict methods to mathematical expressions. Also, to define a method, the user expects to use a definition statement (like define Age ...), not to specify a schema (like schema for PERSON), and to call a method, the user expects to use the name of the method (like Age), not the name of an actor specified in the schema (like DIFF_DT). More important is the consequence, for method execution, of defining methods as schemata : instead of looking up the method’s definition as in usual object-oriented systems (i.e. looking up the definition of Age), the approach of Wuwongse and Ghosh searches for a schema that can be joined with the message and, in general, several schemata could be joined (for example, a schema that computes the age of a person, or a schema that computes the period (in years) that a person spent in a job or in a residence, etc.).
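The difference between the two lookup strategies can be illustrated with a small sketch. It assumes, purely for illustration, that the three schemata mentioned above all contain the same actor DIFF_DT ; the schema names and the two tables are hypothetical.

```python
# Hypothetical data: three schemata of PERSON that all use the actor DIFF_DT.
SCHEMATA_FOR_PERSON = [
    {"name": "age", "actors": {"DIFF_DT"}},
    {"name": "years_in_job", "actors": {"DIFF_DT"}},
    {"name": "years_in_residence", "actors": {"DIFF_DT"}},
]

# Usual object-oriented lookup: one definition per method name.
METHODS_BY_NAME = {"Age": "age"}


def joinable_schemata(actor):
    """Schema-based dispatch: every schema containing the actor is a candidate."""
    return [s["name"] for s in SCHEMATA_FOR_PERSON if actor in s["actors"]]


def lookup_method(name):
    """Name-based dispatch: the method name selects exactly one definition."""
    return METHODS_BY_NAME[name]
```

Under these assumptions, ``joinable_schemata("DIFF_DT")`` returns three candidates, whereas ``lookup_method("Age")`` returns a single definition.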

As noted by Wuwongse and Ghosh [30], much work remains to be done on this groundwork to obtain a complete operational database system.

Sowa proposed CG as a logical foundation of object-oriented systems [28]. His formulation is based on : a) the notion of context (used as an abstraction mechanism), b) the logical formulation of methods and hence, c) the use of inference rules for method execution.

Sowa considered a method as a process that consists of a single rule ``If Conditions Then Conclusions``, where Conditions and Conclusions are CGs. For example, the body of the method START-ENGINE is the following rule (stated in English) : ``if, at the current time, the engine of the car is not running and the ignition is turned on, then, after 5 sec., the engine is running at a speed of 750 rpm``.

A message is considered as an assertion (for instance, ``the ignition of the car is turned on``). Inference rules are then used to ``execute`` (or prove) the message.
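A procedural reading of this rule and of ``message as assertion`` can be sketched as follows. This is not Sowa’s logical formulation (which relies on CGs, contexts and inference rules) ; the state dictionary and the function names are assumptions made for illustration.

```python
def start_engine_rule(state):
    """If the engine is not running and the ignition is on, then after
    5 sec. the engine is running at 750 rpm. Otherwise nothing changes."""
    if not state["engine_running"] and state["ignition_on"]:
        return {
            **state,
            "time_sec": state["time_sec"] + 5,
            "engine_running": True,
            "speed_rpm": 750,
        }
    return dict(state)


def assert_message(state, fact, value):
    """Treat a message as an assertion: add the fact, then try the rule."""
    return start_engine_rule({**state, fact: value})
```

For instance, starting from a car whose engine is stopped and whose ignition is off, ``assert_message(car, "ignition_on", True)`` yields a state in which the engine runs at 750 rpm five seconds later.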

The proof is manual (i.e. directed by an external agent, namely Sowa) and, in addition, some steps aren’t considered in detail, such as the combination of information exported from the nested context with the information of the outer context. For instance, in the nested context of Sowa’s example, there is the statement ``st1`` :

[ENGINE : *e]->(STAT)->[RUNNING]->(CHRC)->[SPEED : @750rpm] which is exported and combined with ``st2`` : [ENGINE : *e]->(STAT)->[~ RUNNING]. According to Sowa, the result is : [ENGINE : *e]->(STAT)->[RUNNING]->(CHRC)->[SPEED : @750rpm]. Why ? What exactly is the combination operation, and what would the result be if the exported statement were ``st2`` and it were combined with ``st1`` ?
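To make the question concrete, consider one candidate reading of the combination, in which exported facts simply override conflicting facts of the outer context. This policy is an assumption made here for illustration ; Sowa’s paper does not state it, and the dictionary encoding of ``st1`` and ``st2`` is hypothetical.

```python
def combine_override(outer, exported):
    """Hypothetical policy: exported facts replace conflicting outer facts."""
    merged = dict(outer)
    merged.update(exported)
    return merged


# Simplified encodings of the two statements about the engine *e.
st1 = {"running": True, "speed_rpm": 750}
st2 = {"running": False}

# st1 exported into a context holding st2.
forward = combine_override(st2, st1)

# st2 exported into a context holding st1.
backward = combine_override(st1, st2)
```

Under this policy, ``forward`` agrees with the result reported by Sowa, but ``backward`` describes an engine that is not running and yet has a speed of 750 rpm. The operation is therefore direction-dependent and needs a precise definition.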

In Sowa’s presentation, a method is reduced to an if-then block and some object-oriented concepts, such as inheritance, access methods and defaults, aren’t considered. He presented his approach through a simple example, and it isn’t clear how it can be generalized while remaining efficient.

Ellis’ paper [8] is also centered on a simple example : Sowa’s example of the car and StartEngine. The StartEngine method is considered as a relation : [Car] ->(StartEngine)-> [Car] (Sowa viewed a method as a concept), with a state-based definition. The latter has the form shown in Figure 12, where PreState, TurnOnIgnition and PostState are CGs that describe respectively ``the car isn’t running and it has ignition``, ``turn on ignition`` and ``the car is running with speed 750rpm and it has ignition``. This state-based formulation is overlaid on the following implication : ``if the pre-state and the event are true, then the post-state is true``, which corresponds to : (p ∧ q) => r or, in Peirce logic, to :

¬[ p q ¬[ r ] ]. The overlay is not clear, however (Figure 12), since the state-based formulation forms one CG, not three CGs that would correspond to the three propositions p, q and r. At the notational level, a non-standard formulation of context is used : the nested context encloses parts of relations (succ and TimeIncrement) while the other parts are in the containing context. A standard formulation could be obtained by using coreferent concepts (for instance, [Event : TurnOnIgnition *x] and [Event : ?x]), but Ellis’ proof would then be longer (the main point of Ellis’ paper is that his new proof takes 4 steps instead of Sowa’s 18 steps).
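The correspondence between the implication and its Peirce form can be checked directly, reading juxtaposition inside a negated context as conjunction :

```latex
\begin{align*}
\neg[\, p \;\; q \;\; \neg[\, r \,]\,]
  &\equiv \neg\,(p \land q \land \neg r) \\
  &\equiv \neg\,(p \land q) \lor r \\
  &\equiv (p \land q) \Rightarrow r
\end{align*}
```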

Figure 12 : State-based definition of the method StartEngine

Now, according to Ellis, sending the message [Car]->(StartEngine)->[Car] to the car [Car : PCXX999] involves their join, producing : [Car : PCXX999]->(StartEngine)->[Car]. But another result is possible :

[Car]->(StartEngine)->[Car : PCXX999] ; it is, however, ignored by Ellis.
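The two possible joins can be enumerated with a small sketch. It uses a deliberately simplified notion of join (an individual concept may be merged with any generic concept of the same type) ; the tuple encoding of concepts and of the message is an assumption made for illustration.

```python
def possible_joins(message, individual):
    """Enumerate the graphs obtained by joining an individual concept with
    each generic concept of the same type in a binary relation.

    A concept is (type, referent), with referent None for a generic concept.
    A message is (source concept, relation name, destination concept)."""
    src, rel, dst = message
    ind_type, _ = individual
    results = []
    if src == (ind_type, None):
        results.append((individual, rel, dst))
    if dst == (ind_type, None):
        results.append((src, rel, individual))
    return results


message = (("Car", None), "StartEngine", ("Car", None))
joins = possible_joins(message, ("Car", "PCXX999"))
```

Here ``joins`` contains two graphs : the one retained by Ellis, where PCXX999 is the source of StartEngine, and the one where PCXX999 is its destination.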

Ellis argues that his approach is more illustrative and more efficient than Sowa’s approach ; it takes 4 steps instead of 18 steps ! The first two steps are : 1) replace the relation (for instance, StartEngine) by its definition, 2) apply modus ponens to deduce r from [(p ∧ q) and (p ∧ q) => r]. But, as we noted above, the implication isn’t clearly stated in the method’s definition and, in addition, the application of modus ponens isn’t clear : in the message, only the pre-state is asserted (p, which corresponds to [Car : PCXX999]), not the pre-state and the event. Also, applying modus ponens should immediately produce r (r corresponds to the post-state), but this isn’t the case in Ellis’ presentation ; the next two steps of the proof serve to get r ! The third step is to expand the TimeIncrement relation in order to compute the result, and the fourth step is to erase the pre-state and the transition, keeping only the post-state.

Much of the confusion in Ellis’ proposal results from his combination of the rule (``if the pre-state and the event are true, then the post-state is true``) with the state transition formulation. In a previous version of his paper [7], Ellis didn’t use the above rule and his approach consisted of three steps : relation (method) expansion, computation of the time constraint (the TimeIncrement relation) and erasure of the pre-state and the transition, keeping only the post-state. The previous version was simpler and clearer.

The main proposal in Ellis’ paper is to define a method as a temporal state transition with a modus ponens interpretation : if the pre-state of an event is asserted, then the post-state will be asserted after a specified time interval. The event itself isn’t really considered and a method is reduced to a succession of states ; hence, if the initial state is asserted, the final state will be asserted too (after a period of time).
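This reading of a method as a temporal state transition can be sketched as follows. The encoding of states as sets of strings is hypothetical, and the 5 sec. delay is borrowed from Sowa’s START-ENGINE rule as an assumption ; Ellis expresses the delay through the TimeIncrement relation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transition:
    pre_state: frozenset   # facts that must be asserted beforehand
    post_state: frozenset  # facts asserted after the delay
    delay_sec: int         # time interval between the two states


START_ENGINE = Transition(
    pre_state=frozenset({"not running", "has ignition"}),
    post_state=frozenset({"running at 750rpm", "has ignition"}),
    delay_sec=5,  # assumed value, taken from Sowa's example
)


def apply_transition(transition, asserted_state, now_sec):
    """If the pre-state is asserted, return (post-state, time at which it
    holds); the pre-state and the transition itself are discarded.
    Return None if the pre-state is not asserted."""
    if transition.pre_state <= asserted_state:
        return transition.post_state, now_sec + transition.delay_sec
    return None
```

Note that the event (``turn on ignition``) plays no role in ``apply_transition`` : only the pre-state is tested, which reflects the remark above that the event itself isn’t really considered.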

Note : Ellis didn’t propose a strategy to reduce the 18 steps of Sowa’s inference approach to 4 steps ; rather, he proposed a different formulation of methods and of method execution that requires only four steps. Sowa’s approach takes 18 steps because it is based on Peirce’s inference rules (and on coreference resolutions, which Ellis avoids since he uses a non-standard formulation of context), rather than on a ``direct`` modus ponens.

9. Summary and future work

We presented in this paper the concurrent object oriented model embedded in SYNERGY and we gave an application that illustrates this use of the language.

The basic features of SYNERGY’s concurrent object oriented model are : 1) an active object, with its message queue, its declarative part and its procedural part (script + body), is described as a labeled context, 2) both “coupled” and “non-coupled” communication modes are provided, 3) object types form a hierarchy used by the inheritance mechanism and, 4) both inter- and intra-concurrency are provided ; several objects can be active in parallel and several methods of an object can be executed in parallel.

Future work includes the use of SYNERGY in a range of concurrent object oriented applications and the development of a multimedia environment for the design and simulation of multi-agent systems.

References

1. Agha G. and C. Hewitt, Actors : A Conceptual Foundation for Concurrent Object-Oriented Programming, in B. Shriver and P. Wegner (eds.), Research Directions in Object Oriented Programming, MIT Press, 1987.

2. Agha G., P. Wegner and A. Yonezawa (Eds.), Research Directions in Concurrent Object-Oriented Programming, The MIT Press, 1993.

3. America P., POOL-T: A Parallel object-oriented language, in [31], 1987.

4. Carriero N., D. Gelernter and L. Zuck, Bauhaus Linda, in [6], 1994.

5. Ciancarini P., Parallel logic Programming using the Linda model of computation, in Banâtre and Le Metayer (Eds), Research directions in high-level parallel programming languages, Springer-Verlag, 1991.

6. Ciancarini P., O. Nierstrasz and A. Yonezawa (Eds.), Object-Based Models and Languages for Concurrent Systems, Springer 1994.

7. Ellis G., Object-Oriented Conceptual Graphs, in Proc. of the Third Intern. Conf. on Conceptual Structures, ICCS'95, Santa Cruz, CA, USA, 1995.

8. Ellis G., Object-Oriented Conceptual Graphs, in Third PEIRCE Workshop, University of Maryland, 1994.

9. Ghosh B. C., Towards Deductive Object-Oriented Databases Based on Conceptual Graphs, Masters Thesis, Division of computer science, Asian Institute of Technology, 1991.

10. Haemmerlé O., Implementation of Multi-Agent Systems using conceptual graphs for knowledge and message representation: The CoGITo Platform, in Proc. of the Third Intern. Conf. on Conceptual Structures, ICCS'95, Santa Cruz, CA, USA, 1995.

11. Hines T., Conceptual Object-Oriented Programming, Master Thesis, Kansas State University, 1986.

12. Hines T. R., J. C. Oh, and M. A. Hines, Object-Oriented Conceptual Graphs, in Proc. of the Fifth Annual Workshop on Conceptual Structures, 1990.

13. Jagannathan V., R. Dodhiawala and L. S. Baum, Blackboard Architectures and Applications, Academic Press, 1989.

14. Janson S. and Haridi S., An Introduction to AKL, A Multi-Paradigm Programming Language, (via WWW), 1993.

15. Kabbaj A., Un système multi-paradigme pour la manipulation des connaissances utilisant la théorie des graphes conceptuels, Ph.D Thesis, DIRO, Université de Montréal, June, 1996.

16. Kabbaj A., K. Rouane and C. Frasson, The Use of a Semantic Network Activation Language in an ITS project, Proc. Third International Conference on Intelligent Tutoring Systems, ITS’96, Montréal, Canada, 1996.

17. Kabbaj A., Contexts, Canons and Coreferences as a basis of a multi-paradigm language, submitted to Fifth International Conference on Conceptual Structures, ICCS’97.

18. Kaiser et al., Multiple Concurrency control policies in an OOP System, in [2], 1993.

19. Liddle S. W., D. W. Embley and S. N. Woodfield, A Seamless Model for Object-Oriented System development, in Bertino E. and S. Urban (Eds.), Object Oriented Methodologies and Systems, Springer-Verlag, 1994.

20. Lukose D., Executable conceptual structures, Proc. First International Conference on Conceptual Structures, ICCS’93, Quebec City, Canada, 1993.

21. Lukose D., T. Cross, C. Munday and F. Sobora, Operational KADS Conceptual Model using conceptual graphs and executable conceptual structures, in Proc. of the Third Intern. Conf. on Conceptual Structures, ICCS'95, Santa Cruz, CA, USA, 1995.

22. Matsuoka S. and A. Yonezawa, Analysis of Inheritance Anomaly in OOCP Languages, in [2], 1993.

23. Nagle T. E., Conceptual Graphs In An Active Agent Paradigm, Proc. of the IBM Workshop on Conceptual Graphs, 1986.

24. Papathomas M., A Unifying Framework for Process Calculus Semantics of COO Languages, in [29], 1992.

25. Saleh H. and P. Gautron, A Concurrency Control Mechanism for C++ Objects, Nishio S. and A. Yonezawa (Eds.), Object Technologies for Advanced Software, Springer-Verlag, 1993.

26. Smolka G., An Oz Primer, (via WWW), 1995.

27. Sowa J. F., Conceptual Structures : Information Processing in Mind and Machine, Addison-Wesley, 1984.

28. Sowa J. F., Logical foundations for representing object-oriented systems, J. of Experimental and Theoretical AI, 5, 1993.

29. Tokoro M., O. Nierstrasz and P. Wegner (eds.), Object Based Concurrent Computing, Springer-Verlag, 1992.

30. Wuwongse V. and B. C. Ghosh, Towards Deductive Object-Oriented Databases Based on Conceptual Graphs, in Proc. of the 7th Annual Workshop on Conceptual Structures, 1992.

31. Yonezawa A. and M. Tokoro (eds.), Object-Oriented Concurrent Programming, MIT Press, 1987.

32. Yonezawa A. (ed), ABCL : An Object-Oriented Concurrent System, MIT Press, 1990.